Accelerating seismic data access: reshaping seismic data into metadata

Seismic data management faces continuing challenges as data volumes grow, while traditional storage technologies are not equipped to handle that volume under evolving workloads. Seismic data is stored in standard files consisting of a set of headers and wiggle traces, a trace being a time series of the amplitudes of waves reflected within the Earth. A seismic survey translates into hundreds of terabytes, a scale at which existing parallel file systems on rotating media and SSDs suffer from a number of issues that degrade performance.

Closing the gap between memory and storage is possible using the latest byte-addressable technologies, such as 3D XPoint, and object stores such as the Distributed Asynchronous Object Storage (DAOS) library. DAOS is an open-source, software-defined, scale-out object store that provides high-bandwidth, low-latency, high-IOPS storage containers. Unlike traditional storage stacks, DAOS is extremely lightweight since it operates end-to-end in user space with full OS bypass. Its design shifts from a hierarchy of files and directories to objects and containers, avoiding the POSIX bottleneck. It interfaces with persistent storage through a direct translation of the user-visible object model into the underlying storage infrastructure and can be viewed as the next generation of parallel file systems.

Reshaping seismic data into metadata, while maintaining both geometry and data access per trace, matches object storage well. Yet object storage is not widely used by the oil and gas industry as a standard parallel file system. We propose a new formulation of the seismic format using DAOS that requires minimal to no changes to user applications, and we illustrate it with some preprocessing examples.

Introduction:

Geophysical exploration and seismic data handling techniques face the challenge of continuously growing data. Traditional data storage strategies quickly become obsolete, especially for seismic data. Seismic data is stored in standard formatted files consisting of a set of recorded wiggle traces. Traces describe echoes from the interfaces between subsurface layers, caused by differences in geological properties. Each trace is a time-series record of the amplitudes of reflected waves initiated by a particular source. Wiggle amplitudes reflect the degree of change between the constituent elements of the subsurface layers.

Geological objectives, acquisition equipment, spatial and vertical resolution, wave-field recording, and sampling redundancy are the key acquisition attributes; they govern data volumes, improve data quality, and reduce recorded noise. A change to any of these attributes has significant implications for how acquisition is done and for the data it produces. These aspects allow better exploration of subsurface layers based on subsets of wave fields.

The geological features of a model of interest in hydrocarbon exploration are 3D in nature, while a 2D section is only a cross-section. A typical land or marine 3D survey is in the range of a few hundred thousand to a few million traces, usually more than 105 traces per square kilometer. In modern surveys, these numbers translate into a few hundred terabytes for a single line, and up to petabytes for multiple lines.

Subsurface reconstruction and migration techniques rely on intensive computation over the recorded seismic datasets to extract reliable information. To reconstruct an acoustic image of a model, that is, a limited area of the underlying subsurface, a migration technique formulates a complicated distorting grid of diffraction points, usually governed by wave propagation equations with a priori estimated velocities.

Theoretically, waves propagate to infinity or continue until they vanish. This cannot be reproduced when modeling a particular region because computational resources are limited. Seismic modeling is usually embarrassingly parallel, and a large portion of its performance is controlled by data handling techniques.

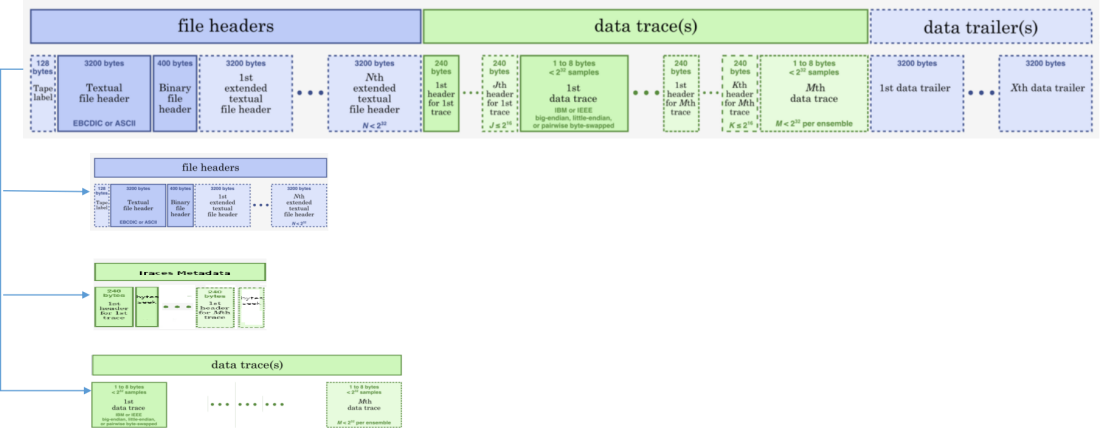

Seismic data is growing exponentially with advances in acquisition techniques. Accommodating the data explosion arising from all of these attributes over the long term therefore requires a strategic shift in both data formulation and management. A typical file-based parallel file system is no longer the best choice for seismic data, since it cannot bring data close enough to the processing nodes at the appropriate rates. Moreover, standard seismic data files, such as the widely used SEG-Y, suffer from IO alignment issues (as shown in Fig 2) that cause performance degradation. Storage block misalignment occurs when logical blocks are mapped so that they do not start at physical block boundaries; the resulting degradation varies and depends mainly on the underlying storage system.
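The alignment issue can be made concrete with a small sketch. It relies on the standard SEG-Y layout (a 3200-byte textual header, a 400-byte binary header, and a 240-byte header in front of every trace); the absence of extended textual headers, fixed-length traces, 4-byte samples, and a 4096-byte storage block are assumptions chosen for illustration, and the function names are hypothetical.

```python
TEXT_HEADER = 3200    # SEG-Y textual file header, in bytes
BINARY_HEADER = 400   # SEG-Y binary file header, in bytes
TRACE_HEADER = 240    # SEG-Y trace header, in bytes


def trace_sample_offset(index, n_samples, bytes_per_sample=4):
    """Byte offset of the first sample of trace `index` in the file.

    Assumes fixed-length traces and no extended textual headers.
    """
    trace_len = TRACE_HEADER + n_samples * bytes_per_sample
    return TEXT_HEADER + BINARY_HEADER + index * trace_len + TRACE_HEADER


def aligned_fraction(n_traces, n_samples, block=4096):
    """Fraction of traces whose samples start on a storage block boundary."""
    aligned = sum(
        1 for i in range(n_traces)
        if trace_sample_offset(i, n_samples) % block == 0
    )
    return aligned / n_traces


# With 1000 samples per trace, the samples of the first trace start at
# byte 3840, which is not a multiple of 4096.
first_offset = trace_sample_offset(0, 1000)
fraction = aligned_fraction(256, 1000)
```

Under these assumptions only one trace in 256 has samples that begin on a block boundary, so almost every per-trace read touches a partial block at its start.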

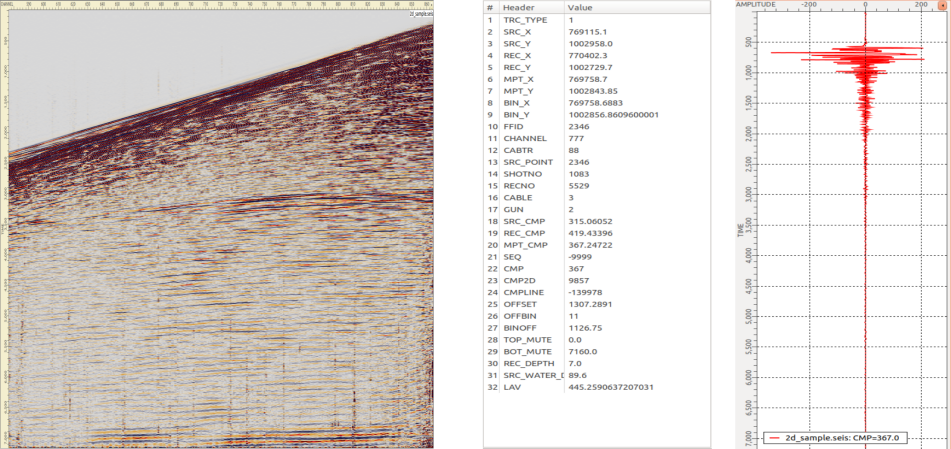

Generally, seismic data consists of two main components (as shown in Fig 1): trace headers, which describe the acquisition geometry, and time series of data samples. Reshaping data into metadata, maintaining both geometry and data access per trace side by side with the data samples, matches object storage well (as shown in Fig 2). Moving to asynchronous object storage therefore offers the most appropriate way to handle such massive amounts of seismic data, and it aligns well with most preprocessing and migration approaches. Yet object storage is not widely used by industry as a standard parallel file system.

Right now, data- and IO-oriented issues are the main challenges facing geophysicists. Fortunately, this kind of data mutation offers flexibility that translates directly into data utilization, with exactly the same functionality as the original format.

Over the last couple of decades, a number of projects have addressed the challenges of parallel file systems in general, but very few have been customized for seismic data management. Designs always aim to provide at least one of the main features, such as high availability, fine data granularity, highly concurrent access, or version control, while facing the cost of existing memory and storage solutions.

Closing the gap between memory and storage, and bringing data even closer to the processing elements, with the latest byte-addressable technologies such as Intel 3D XPoint, has positioned object storage as the future building block of HPC parallel storage systems. DAOS (Distributed Asynchronous Object Storage), an exascale IO stack, incorporates such new hardware, offering the performance demanded by scientific applications in general and suiting oil and gas (OG) demands well. Its design shifts from a hierarchy of files and directories to objects and containers, avoiding the POSIX bottleneck and exploiting the extensive availability of NVRAM and SSDs on compute nodes. It interfaces with persistent storage through a direct translation of the user-visible object model into the underlying storage infrastructure. With the features it offers, DAOS can be viewed as the next generation of traditional parallel file systems.

In this work, we propose a new formulation of the traditional seismic data file format that requires minimal changes to the original processing, migration, and simulation techniques. Moreover, we illustrate the real potential of data reshaping with two of the most commonly used preprocessing techniques: CMP sorting and muting kernels.

Approach overview:

The idea is to mitigate the under-utilization of processing elements, which is due to I/O bottlenecks and to populating memory at different hierarchy levels with unnecessary objects, by splitting the acquisition geometry from the data samples and providing proper access techniques. Our approach, which addresses both issues, is introduced below along with the new optimization opportunities it opens.

- Seismic data mutation: shift seismic data files from directories and streams of bytes into a container and object model with aligned physical data blocks. Migration and simulation techniques access data in exactly the way DAOS handles it. While DAOS uses metadata stored in key-value pairs to construct the data hierarchy, migration approaches read chunks of data using the geometrical information of the wiggle traces in order to process them. Mapping the data layout and user-defined blocks between seismic traces and DAOS therefore simplifies object processing for most OG techniques.

- Contiguous chunks data processing: the data layout can take many forms, from per-trace data samples, which fit complex migration techniques like RTM and FWI, to blocks spanning a number of time steps per set of contiguous traces, which match many preprocessing techniques like Mute.

- Redundancy & processing stages versioning: processing petabytes of data with a replica per pre/processing stage makes space limited and expensive. Versioning subsets of the data, that is, a particular set of arrays/objects, per processing stage eliminates the need to replicate complete datasets as with traditional parallel file systems, which increases the throughput of the different techniques.

- On-storage light-weight processing procedures: seismic data rearrangement is a computationally cheap sorting technique, yet it is mandatory for a number of migration approaches. Transferring data to processing nodes to perform this kind of sorting is costly in terms of data transfer and storage, as it ends up with a re-sorted copy of the data. This is not the case with DAOS: manipulating metadata is trivial and requires neither data transfer nor data copy.
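The idea behind the last two points, reordering and versioning through metadata alone, can be sketched in plain Python. This is an in-memory illustration under simplifying assumptions, not DAOS code: the `offset` header field, the function names, and the `views` dictionary are hypothetical stand-ins for whatever metadata a real layout would store.

```python
def split_traces(traces):
    """Separate (header, samples) pairs into two parallel lists."""
    headers = [dict(header) for header, _ in traces]
    samples = [data for _, data in traces]
    return headers, samples


def sort_order(headers, key):
    """Return trace indices ordered by one header field.

    Only headers are inspected; the samples are never touched.
    """
    return sorted(range(len(headers)), key=lambda i: headers[i][key])


def read_in_order(samples, order):
    """Yield the original sample arrays following a stored ordering."""
    for i in order:
        yield samples[i]


traces = [
    ({"offset": 300}, [0.1, 0.2]),
    ({"offset": 100}, [0.3, 0.4]),
    ({"offset": 200}, [0.5, 0.6]),
]
headers, samples = split_traces(traces)

# Each processing stage keeps its own small index over the same samples,
# instead of a full re-sorted copy of the dataset.
views = {
    "acquisition": list(range(len(headers))),
    "by_offset": sort_order(headers, "offset"),
}
```

Each entry in `views` costs one integer per trace, while the sample arrays are shared by every view, which is what makes keeping several orderings side by side affordable.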

Preliminary results & findings:

In our preliminary implementation, we adopted DAOS with an initial data layout in which each trace is an object with DAOS array metadata under a single distribution key that contains a set of range keys for the header elements and one or more range keys for the data samples, depending on the target processing approach. Before going on to the full DAOS implementation, we benchmarked the proposed seismic data reformulation with common-depth-point (CDP) and common-midpoint (CMP) sorting, one of the most widely used seismic preprocessing techniques.
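To make the layout easier to picture, the following sketch models one trace object as nested Python dictionaries. It does not use the DAOS API; the key names (`trace`, `hdr/...`, `data/...`), the header fields `sx` and `gx`, and the `chunk_len` parameter are all assumptions introduced for illustration.

```python
def build_trace_object(header, samples, chunk_len=None):
    """Model one trace as {top-level key: {key: value}}.

    Header elements get one key each; samples are stored under a single
    key, or under several keys when `chunk_len` is given.
    """
    keys = {f"hdr/{name}": value for name, value in header.items()}
    step = chunk_len or len(samples) or 1
    for start in range(0, len(samples), step):
        keys[f"data/{start}"] = list(samples[start:start + step])
    return {"trace": keys}


def read_header(obj, name):
    """Fetch one header element without touching any sample key."""
    return obj["trace"][f"hdr/{name}"]


def read_samples(obj, start, stop):
    """Collect samples in [start, stop) from the chunks that overlap it."""
    out = []
    for key, chunk in obj["trace"].items():
        if not key.startswith("data/"):
            continue
        first = int(key[len("data/"):])
        lo, hi = max(start, first), min(stop, first + len(chunk))
        if lo < hi:
            out.extend(chunk[lo - first:hi - first])
    return out


obj = build_trace_object({"sx": 100, "gx": 300}, list(range(10)), chunk_len=4)
window = read_samples(obj, 3, 9)
```

A geometry-only operation such as sorting would call only `read_header`, while a kernel working on a time window would call `read_samples` and touch just the chunks that overlap the window.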

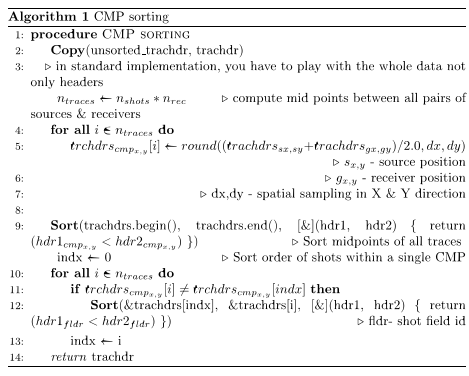

Multifold seismic data is usually stored in source-receiver order, while much conventional processing is done in midpoint order. A CMP gather is therefore obtained through a transformation of the geometry coordinates (as shown in Alg. 1). CMP and CDP gathers are equivalent for horizontal reflectors without lateral velocity variations, so the data access pattern does not differ between the two cases. In our initial proof of concept, we implemented CMP gathers to show the potential of merely splitting metadata from trace samples.
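A minimal sketch of this coordinate transformation is given below for a 2D line. It is not a reproduction of Alg. 1: the header field names `sx` and `gx` (source and receiver positions), the `bin_size` parameter, and the function name are assumptions. The midpoint is taken as the average of the source and receiver positions, and traces within a gather are ordered by absolute offset.

```python
from collections import defaultdict


def cmp_gathers(headers, bin_size):
    """Group trace indices into CMP bins using header geometry only.

    Returns {bin index: [trace indices ordered by absolute offset]}.
    """
    gathers = defaultdict(list)
    for i, h in enumerate(headers):
        midpoint = 0.5 * (h["sx"] + h["gx"])   # source-receiver midpoint
        offset = abs(h["gx"] - h["sx"])        # source-receiver distance
        gathers[int(midpoint // bin_size)].append((offset, i))
    return {
        b: [i for _, i in sorted(members)]
        for b, members in sorted(gathers.items())
    }


headers = [
    {"sx": 0, "gx": 100},    # midpoint 50, offset 100
    {"sx": 0, "gx": 200},    # midpoint 100, offset 200
    {"sx": 50, "gx": 150},   # midpoint 100, offset 100
    {"sx": 25, "gx": 75},    # midpoint 50, offset 50
]
gathers = cmp_gathers(headers, bin_size=25)
```

The result is purely an index over the traces, so it can be written back as new metadata while the trace samples stay where they are.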

The BP 2004 model was used to benchmark our techniques on an Intel 2-socket server (Cascade Lake, 40 cores @ 2.5 GHz) with Intel compiler 2019u3. This dataset consists of 1348 shots with a total of 1535190 traces. Multithreaded CMP sorting takes ~0.9 sec, and metadata IO operations take ~0.4 sec.

Sorting the same data with traditional approaches takes a considerable amount of time; however, this dataset was not large enough. We therefore shuffled and replicated the BP dataset traces to match a typical multiline acquisition with 8 streamers of 1k receivers per shot and 5k samples per trace. A single line covers about 20 km with 25 m spacing between shots, which amounts to 128 TB per line and 3.8 PB in total for 30 lines. Multithreaded CMP sorting in this case takes on average ~4:30 min, and asynchronous data IO operations take ~1:30 min. In both cases there is no need to rewrite the sorted traces; only the re-sorted metadata is written, with appropriate access to the original data. Moving to a complete DAOS mapping will offer optimization opportunities that would not be possible with traditional approaches.
